2025: The Year AI Grew Up, and What That Means for Us

2025 confirmed what we've been saying all along: stop chasing models and start building evaluation frameworks. Multi-model strategies are now mandatory. Here's what that means for how we build in 2026.

Hey AgentBuilders,

I hope everyone is wrapping up the year with a bang.

I am excited to bring you new things in 2026: new formats, new engagements, new opportunities. More on that later.

What a year this has been in AI.

2025 will be remembered as the year AI stopped being a demo and started being infrastructure. Not because of some breakthrough model drop, but because the questions changed from “What can AI do?” to “What does AI actually cost, and can we trust it?”

If you’ve been part of this community, you know we’ve been asking those questions all along.

You should get off the model-release hamster wheel

2025 gave us permanent model season. GPT-5 variants, Claude 4.x, Gemini 3, Grok updates, Llama 4, Qwen 3, DeepSeek – the release calendar was relentless. Every few weeks, another “state of the art” benchmark screenshot on LinkedIn.

But here’s what actually happened: at the frontier, models converged. Different models won different benchmarks (one best at reasoning, another at coding, another at multimodal tasks), but no single runaway winner emerged. The gap between “best” and “second best” became noise. You wouldn’t believe how much time I spend answering questions about model capabilities and fitness for specific use cases.

This is exactly why I keep talking about defining your success metrics before picking tools: if you’re chasing the latest model release, you’re solving the wrong problem. The question isn’t “which model is best?” It’s “which model combination solves my specific use case at acceptable cost and latency?”

The takeaway: stop benchmarking models in isolation. Start building evaluation frameworks that test YOUR workflows, YOUR data, YOUR edge cases. The enterprises that won in 2025 weren’t the ones with access to the newest model; they were the ones who knew how to route workloads intelligently across multiple models.
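To make “test YOUR workflows” concrete, here is a minimal Python sketch of a workflow-level eval harness. Everything in it is a hypothetical placeholder: `EvalCase`, `evaluate`, the two toy “models” (plain functions standing in for real API calls), and the two checks. The point is the shape: your own inputs, your own pass/fail rules, and the same suite run against every candidate model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One test drawn from your own workflow: an input plus a pass/fail rule."""
    name: str
    prompt: str
    check: Callable[[str], bool]


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through one model; report pass rate and failing case names."""
    if not cases:
        raise ValueError("need at least one eval case")
    failures = [c.name for c in cases if not c.check(model(c.prompt))]
    return {"pass_rate": (len(cases) - len(failures)) / len(cases), "failures": failures}


# Toy stand-ins for real model calls (hypothetical, for illustration only).
def verbose_model(prompt: str) -> str:
    return f"Here is a summary of your request: {prompt}"


def terse_model(prompt: str) -> str:
    return prompt


# Hypothetical cases: keep the invoice ID, and keep the answer short.
cases = [
    EvalCase("keeps_invoice_id", "invoice INV-042 is overdue", lambda out: "INV-042" in out),
    EvalCase("under_30_chars", "invoice INV-042 is overdue", lambda out: len(out) <= 30),
]

for name, model in [("verbose_model", verbose_model), ("terse_model", terse_model)]:
    print(name, evaluate(model, cases))
```

Swap the toy functions for real model calls and the cases for examples pulled from your own logs, and the same loop becomes a side-by-side comparison on the work you actually do.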

Multi-model strategy

2025 drove home something we’ve been teaching in AgentBuild Circles: you need a multi-model strategy.

Enterprises stopped betting everything on one provider and started orchestrating across several models based on cost, latency, and regulatory requirements. Some queries went to expensive frontier models, others to cheap specialized models, and some to open-weight models running on-prem for sensitive data.
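As an illustration only, that routing idea might look like this in Python. The catalog entries, prices, and latencies are made-up placeholders, not real vendor numbers, and the rule order (data residency first, then capability, then latency, then cost) is one reasonable assumption rather than a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # USD; illustrative placeholder, not a real price
    p95_latency_ms: int        # illustrative placeholder
    on_prem: bool              # True if data never leaves your infrastructure
    tier: str                  # "frontier" or "small"


# Hypothetical fleet: one frontier API, one cheap hosted model, one on-prem open-weight model.
CATALOG = [
    ModelOption("frontier-api", 0.010, 2500, False, "frontier"),
    ModelOption("small-api", 0.0005, 400, False, "small"),
    ModelOption("open-weights-onprem", 0.0008, 900, True, "small"),
]


def route(sensitive: bool, needs_deep_reasoning: bool, latency_budget_ms: int) -> ModelOption:
    """Pick the cheapest allowed model, applying hard constraints before soft ones."""
    candidates = CATALOG
    if sensitive:
        # Regulatory constraint is non-negotiable: only on-prem models qualify.
        candidates = [m for m in candidates if m.on_prem]
        if not candidates:
            raise ValueError("no model satisfies the data-residency requirement")
    if needs_deep_reasoning:
        # Prefer frontier models, but fall back to whatever is allowed.
        candidates = [m for m in candidates if m.tier == "frontier"] or candidates
    # Respect the latency budget when possible; otherwise keep the allowed set.
    candidates = [m for m in candidates if m.p95_latency_ms <= latency_budget_ms] or candidates
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


print(route(sensitive=True, needs_deep_reasoning=True, latency_budget_ms=5000).name)    # open-weights-onprem
print(route(sensitive=False, needs_deep_reasoning=True, latency_budget_ms=5000).name)   # frontier-api
print(route(sensitive=False, needs_deep_reasoning=False, latency_budget_ms=1000).name)  # small-api
```

Putting the regulatory filter first means a cheaper or faster model can never win a sensitive request it isn’t allowed to see.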

Our Cohort 1 members learned this the hard way during their 6-week sprints. They discovered that:

Agent workflows rarely need frontier models for every step

Routing logic matters more than raw model capability

Evaluation isn’t a one-time check – it’s continuous monitoring across your model fleet
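Continuous monitoring can start as simply as a rolling pass rate per model. This is a hypothetical sketch: `RollingEvalMonitor`, the window size, and the threshold are assumptions, and “passed” stands for whatever production check you already run (a schema validation, a grader, a user thumbs-up).

```python
from collections import deque


class RollingEvalMonitor:
    """Track recent pass/fail results per model and flag any model that drifts.

    Window size and threshold are hypothetical defaults; tune them per workload."""

    def __init__(self, window: int = 100, min_pass_rate: float = 0.9):
        self.window = window
        self.min_pass_rate = min_pass_rate
        self.results: dict[str, deque] = {}

    def record(self, model: str, passed: bool) -> None:
        # deque(maxlen=...) drops the oldest result once the window is full.
        self.results.setdefault(model, deque(maxlen=self.window)).append(passed)

    def alerts(self) -> list[str]:
        # Only judge models with a full window, to avoid alerting on tiny samples.
        return [
            model for model, recent in self.results.items()
            if len(recent) == self.window and sum(recent) / self.window < self.min_pass_rate
        ]


monitor = RollingEvalMonitor(window=10, min_pass_rate=0.8)
for passed in [True] * 7 + [False] * 3:
    monitor.record("small-api", passed)
for _ in range(10):
    monitor.record("frontier-api", True)
print(monitor.alerts())  # ['small-api']
```

Because the window rolls, a model that recovers stops being flagged on its own, which is the “continuous” part: the check runs on every request, not once at launch.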

The tooling for orchestration, routing, and evaluation quietly became one of the most competitive layers in the stack. This is where the real differentiation happens now.

The economics shifted and created opportunities

2025 saw an AI price war. Token prices crashed. Inference costs plummeted. What cost $100 in early 2024 cost $10 by late 2025.

Great for users. Terrible for anyone trying to compete on raw API access.

But here’s the opportunity: defensibility moved up the stack. The winners aren’t competing on model access – they’re competing on evaluation quality, workflow integration, and domain-specific data.

This is also why open source had its best year. Llama 4, Qwen 3, DeepSeek variants, and the Mistral families reached “good enough” performance for most enterprise tasks, especially with retrieval and domain tuning. Even when companies ran these through managed services, having strong open options shifted the power dynamic with proprietary vendors.

For builders: Your competitive advantage isn’t which API key you have. It’s how well you’ve instrumented your system, how fast you can evaluate new models, and whether you’ve built evaluation into your architecture from day one.

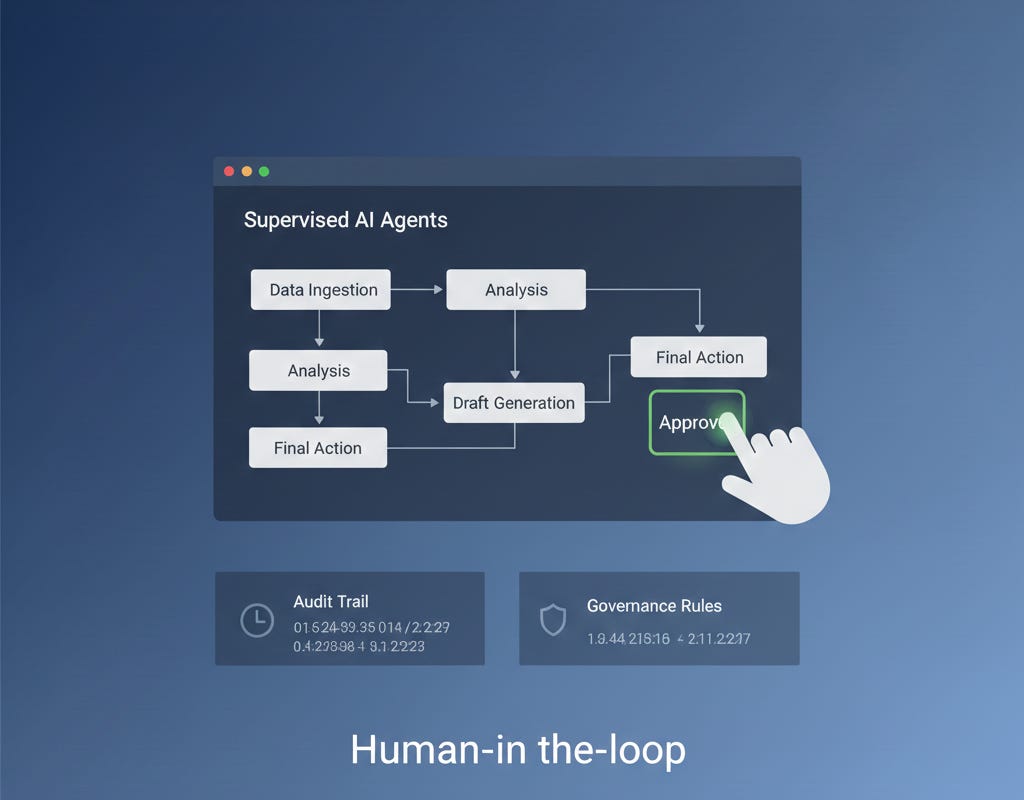

Agents became real (but supervised)

Agents moved from demos to production. Not fully autonomous AI colleagues – more like heavily supervised macros that can take actions: updating records, triggering workflows, interacting with other tools.

This is exactly the territory AgentBuild explores. We knew the bottleneck wasn’t “can the model do it?” but “can the organization trust it?”

That’s why governance, approvals, and audit trails dominated enterprise conversations in 2025.

The Reverse Strategy Framework starts with defining success metrics and evaluation criteria precisely because of this. You can’t deploy agents at scale without knowing how to measure when they’re working and catch when they’re not.
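The governance side of “supervised” is easy to picture in code: low-risk actions run on policy, everything else waits for a human, and every decision is logged either way. This is a minimal sketch; `AUTO_APPROVED`, `AuditLog`, and `execute_action` are hypothetical names, and a production system would persist the log and plug in a real approval workflow.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical policy: actions the agent may take without human sign-off.
AUTO_APPROVED = {"read_record", "draft_email"}


@dataclass
class AuditLog:
    """In-memory audit trail; a real deployment would write somewhere durable."""
    entries: list = field(default_factory=list)

    def write(self, **event) -> None:
        event["ts"] = datetime.now(timezone.utc).isoformat()
        self.entries.append(event)


def execute_action(action: str, params: dict,
                   approver: Callable[[str, dict], bool], log: AuditLog) -> bool:
    """Decide whether an agent-proposed action may run and record the decision.

    Returns True if the caller should dispatch the real tool call."""
    if action in AUTO_APPROVED:
        approved, decided_by = True, "policy"
    else:
        approved, decided_by = approver(action, params), "human"
    # Log every decision, including rejections, so the trail is complete.
    log.write(action=action, params=params, approved=approved, decided_by=decided_by)
    return approved


# Usage: a stand-in reviewer who rejects everything sent to them.
def deny_all(action: str, params: dict) -> bool:
    return False


log = AuditLog()
ran_read = execute_action("read_record", {"id": 7}, deny_all, log)
ran_update = execute_action("update_record", {"id": 7, "status": "closed"}, deny_all, log)
print(ran_read, ran_update)  # True False
```

Logging rejections as well as approvals is deliberate: the audit trail is what lets you measure how often the agent proposes something a human would not sign off on.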

The hype corrected (mildly)

2025 brought a reality check. The grandest claims – fully automated knowledge work, instant abundance – didn’t materialize.

But AI embedded itself deeply into search, office software, customer service, marketing, and coding.

The question evolved from “Is AI real?” to “What actually works, and at what cost?”

Investors and executives grew more skeptical of vague AI pitches, but they stayed convinced that AI is a long-term growth driver. The bar just got higher.

This is our opportunity. While others pivot to the next hype cycle, we focus on production readiness, evaluation frameworks, and systems that actually ship value.

What this means for 2026

If 2023-24 were “wow, this is possible” and 2025 was “what actually works?”, then 2026 is “who can build this into durable products with healthy economics?”

The winners won’t be the ones with the best model access. They’ll be the ones who:

Built evaluation-first architectures

Mastered multi-model orchestration

Built reliable data infrastructure

Focused on workflow integration over raw capability

Measured success in business outcomes, not benchmark scores

2026 is our year. Not because we have access to better models, but because we understand how to build the infrastructure layer that actually matters: evaluation, orchestration, and production-grade thinking.

I am excited!

Let’s build.

- Sandi

Found this useful? Ask your friends to join.

We have so much planned for the community - can’t wait to share more soon.