Code Breakdown: Learn how to build agents using Python

I built a smart email assistant agent. Follow along as I break down the code in depth, then find the link to the GitHub repo and try it out yourself.

Hello AgentBuilders 👋

Today, I'm excited to walk you through the Smart Email Responder - an AI agent that automatically processes your Gmail inbox, filters out noise, and creates draft responses for important emails. Let's break down the code and learn how this intelligent agent works under the hood.

If you want to build an agent with a team, join AgentBuild Circles by 8th August.

Code Breakdown

(Full code in GitHub, find link at the end of this blog)

In this blog, I break down the code into parts, mainly aligned to the components of an AI agent. If you would like to dive deeper into the components of an AI agent and understand how each part is crucial for success in enterprises, watch my webinar - Anatomy of an AI Agent.

In the following subsections I explain each part of the code that makes up the Smart Email Responder agent. I have simplified the code blocks in this blog so they are easier to understand. You might see some differences in the actual code in GitHub.

Part 1: The Import Section

What's happening here?

Think of imports as gathering your tools before starting work. We're pulling in:

Python Standard Libs: These are foundational for managing config (`os`), time logic (`datetime`), and data (`json`).

`dataclass` & `typing`: These give structure to your logic, like defining schemas without needing a database.

Environment Management (`dotenv`): Keeps secrets like API keys out of your source code. This is a best practice for security and portability.

Google API Libraries: These connect us to real user workflows (email + calendar). (Note: if you are using a different email provider, import the appropriate libraries.)

OpenAI SDK: This is the reasoning engine, the brain of the agent. (Note: import the appropriate libraries for other LLM providers.)

Date Parsing: Humans don’t say "2025-07-28T15:00Z", they say "next Thursday at 3PM". We need a bridge between those.

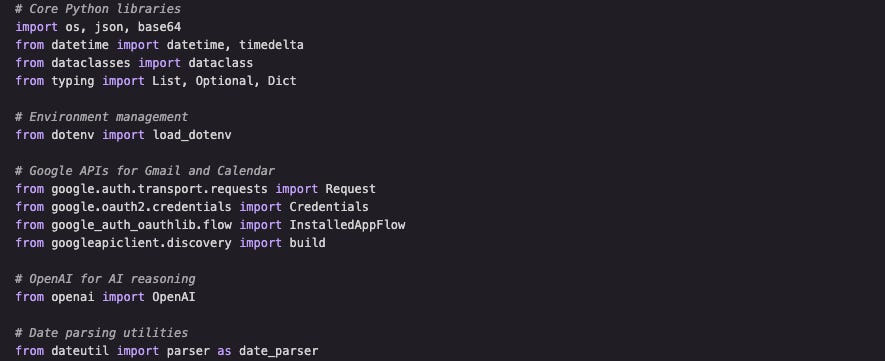

Part 2: Data Structures - The Agent's Memory

Instead of juggling messy dictionaries, we create clean, typed data structures. This makes our code more reliable and easier to debug. Each `EmailAnalysis` object contains everything our agent learned about an email - like a detailed report card.

These structures hold what the agent has understood about a particular input, in this case, an email. We call this the agent’s "working memory" because it’s where the agent stores conclusions drawn from its reasoning process.

It’s not memory in the long-term or episodic sense (like remembering past conversations), but rather a structured summary of its current thought. Think of it like a notepad the agent uses while reading an email - it jots down what kind of message this is, whether a reply is needed, what to say, and so on.

In production systems, this kind of structured "memory" matters because:

It makes the agent’s reasoning transparent and debuggable.

It decouples AI outputs from downstream logic (e.g., whether or not to send a reply).

It creates a formal data contract that you can log, test, or audit.
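To make the idea of working memory concrete, here is a minimal sketch of what such a structure could look like. The field names below (`email_id`, `category`, `needs_reply`, `reasoning`, `draft_reply`, `meeting_requested`) are illustrative assumptions for this post, so the actual `EmailAnalysis` in the repo may carry different fields.

```python
from dataclasses import dataclass, asdict
from typing import Optional
import json


@dataclass
class EmailAnalysis:
    """Working memory for one email. Field names are illustrative assumptions."""
    email_id: str
    category: str                       # e.g. "important", "newsletter", "spam"
    needs_reply: bool
    reasoning: str                      # why the model reached this decision
    draft_reply: Optional[str] = None   # filled in only when a reply is needed
    meeting_requested: bool = False


analysis = EmailAnalysis(
    email_id="abc123",
    category="important",
    needs_reply=True,
    reasoning="Sender asks a direct question about the contract.",
    draft_reply="Thanks for reaching out. I will review and reply by Friday.",
)

# A dataclass converts cleanly to a dict, so it can be logged or audited as JSON.
log_line = json.dumps(asdict(analysis))
```

Because every field is named and typed, downstream code can check `analysis.needs_reply` directly instead of guessing at dictionary keys.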

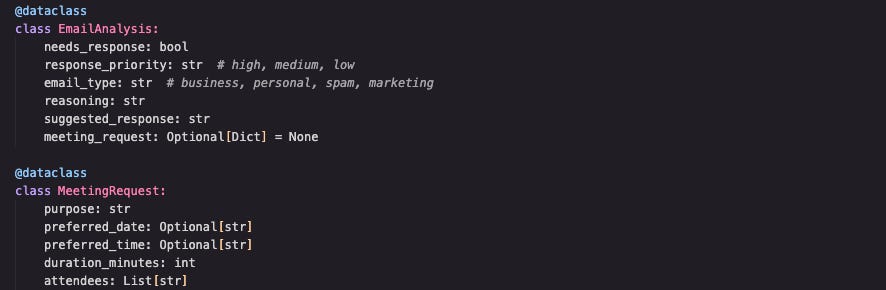

Part 3: The AI Brain - LLM Integration

Every AI agent needs to interact with an LLM. This is the core part of an AI agent that helps with reasoning and decision making, which is why this component is called the "brain" of an AI agent.

The heart of our agent lies in the `analyze_email_with_reasoning()` method. Here's where the magic happens:

This is a call to OpenAI’s Chat API using the gpt-4o-mini model. The messages parameter defines a two-role conversation:

The system message sets the behavior and expertise of the model (in this case, an "expert email assistant"). This provides high-level context and constraints that steer all subsequent replies.

The user message contains the actual prompt, our specific instruction for analyzing the email, following predefined rules, and returning structured JSON.

Setting temperature=0.1 is a critical production choice. In LLMs, temperature controls randomness. A low value like 0.1 makes responses far more consistent from run to run (though not strictly deterministic), which is ideal when you want repeatable, predictable behavior rather than creative variability.
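The call described above could look roughly like this. Treat it as a simplified sketch: it is written as a standalone function rather than a method, the `client` argument is assumed to be an `openai.OpenAI()` instance, and the exact wording of the system message is my assumption.

```python
def analyze_email_with_reasoning(client, prompt: str) -> str:
    """Send the analysis prompt to the chat model and return the raw text reply.

    `client` is assumed to be an `openai.OpenAI()` instance (or any object
    exposing the same `chat.completions.create` method).
    """
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            # System message: sets the model's role and constraints.
            {"role": "system", "content": "You are an expert email assistant."},
            # User message: the task-specific instructions plus the email itself.
            {"role": "user", "content": prompt},
        ],
        temperature=0.1,  # low randomness for more consistent output
    )
    # The reply text lives on the first choice's message.
    return response.choices[0].message.content
```

Passing the client in as an argument keeps the function easy to test, since you can swap in a stub without touching the network.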

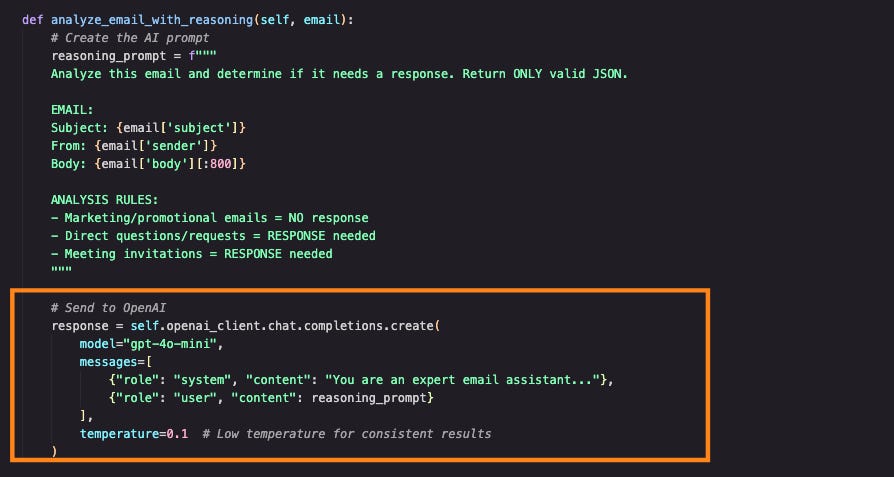

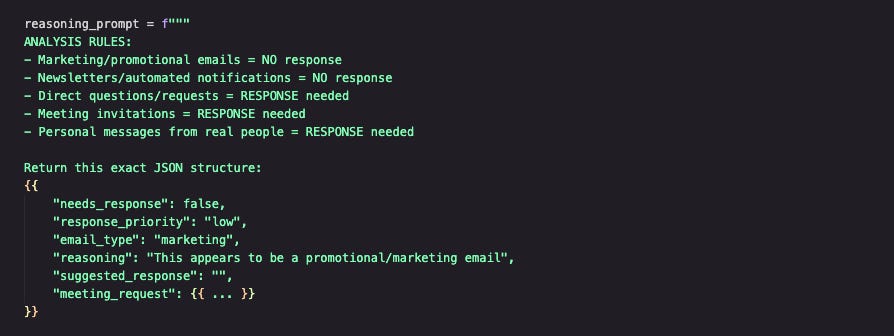

Part 4: Prompt - The Secret Sauce

Our prompt is carefully crafted to guide the AI's reasoning. We provide clear rules, examples, and a structured output format. This reduces ambiguity and makes it far more likely that the AI returns exactly what we need. Asking for JSON output makes parsing reliable and predictable.

In short, this block converts a human-like instruction into a structured decision using a model that's primed to reason, not imagine. It’s where AI moves from generic capability to task-specific intelligence, anchored by context, rules, and output expectations.
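Here is a small sketch of the pattern: a prompt template with explicit rules and a fixed JSON shape, plus a parser that checks the reply against that shape. The rules, the key names (`is_important`, `needs_reply`, `reasoning`), and the helper names are hypothetical and chosen for illustration; the prompt in the repo is more detailed.

```python
import json

PROMPT_TEMPLATE = """Analyze the email below and decide how to handle it.

Rules:
1. Newsletters, promotions, and automated notifications are not important.
2. Direct questions or requests from a person need a reply.

Return ONLY a JSON object with these keys:
{{"is_important": true or false, "needs_reply": true or false, "reasoning": "<one sentence>"}}

Email:
From: {sender}
Subject: {subject}
Body: {body}
"""

REQUIRED_KEYS = {"is_important", "needs_reply", "reasoning"}


def build_prompt(sender: str, subject: str, body: str) -> str:
    """Fill the template with the fields of one email."""
    return PROMPT_TEMPLATE.format(sender=sender, subject=subject, body=body)


def parse_analysis(raw: str) -> dict:
    """Parse the model's reply, failing loudly if the output contract is broken."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("Model reply is not a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"Model reply is missing keys: {sorted(missing)}")
    return data
```

Validating the reply before acting on it matters because even at low temperature a model can occasionally return malformed or incomplete JSON.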

Dive deeper into the best practices of Prompt Engineering in the webinar: Mastering Prompt Engineering and Planning

Part 5: Tool Calling - API Integration gets the job done

Agents need tools to perform tasks, and this is essential for enterprise workflows. An agent that cannot integrate with enterprise systems, datastores, and external applications is of little use. Our agent integrates with multiple APIs: it connects to Gmail and Google Calendar and takes action, like reading emails, creating drafts, and booking calendar slots.

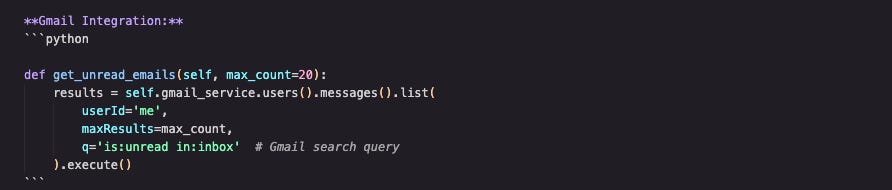

Gmail Integration

The get_unread_emails() function leverages the Gmail API to fetch unread emails from the user's inbox. Here's what each part does:

`self.gmail_service` is the authenticated Gmail API client.

`.users().messages().list(...)` is the API call to list messages.

`userId='me'` uses the authorized user's account.

`q='is:unread in:inbox'` is a Gmail-style search query to narrow the scope to unread emails only.

`maxResults=max_count` ensures we limit the number of emails we process in one run.

This is how the agent connects perception to input: it gathers the data it needs to analyze. And importantly, it does so using secure, enterprise-ready APIs, not scrapers or hacks. That makes this design reliable, repeatable, and compliant.
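Putting those pieces together, the fetch step could be sketched as below. This is a simplified standalone version, not the exact code from the repo: `gmail_service` is passed in as an argument and is assumed to be the client built with `googleapiclient.discovery.build("gmail", "v1", credentials=creds)`.

```python
def get_unread_emails(gmail_service, max_count: int = 10) -> list:
    """Return message stubs (id and threadId) for unread inbox emails.

    `gmail_service` is assumed to be an authenticated Gmail API client.
    """
    response = (
        gmail_service.users()
        .messages()
        .list(userId="me", q="is:unread in:inbox", maxResults=max_count)
        .execute()
    )
    # The "messages" key is absent when nothing matches the query.
    return response.get("messages", [])
```

Note that the list call returns only message IDs and thread IDs. To read the subject and body, each ID still has to be fetched with `users().messages().get(userId="me", id=...)`.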

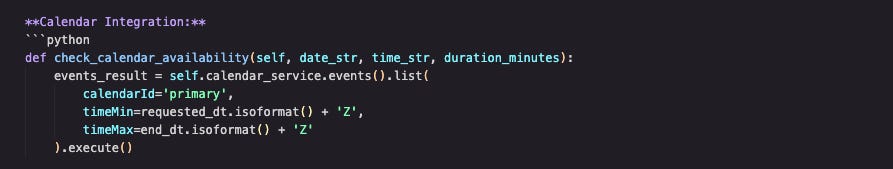

Calendar Integration

When the AI detects a meeting request in an email, it doesn't just suggest a response, it checks your real-time calendar availability. Here’s what’s happening:

`self.calendar_service` is the authenticated Google Calendar client.

`.events().list(...)` fetches all events within the desired time window.

`calendarId='primary'` targets the user’s default calendar.

`timeMin` and `timeMax` define the time range to check, converted to ISO 8601 format for API compatibility.

This integration lets the agent act like a true executive assistant, cross-referencing proposed meeting times with the user’s actual schedule to avoid conflicts. In an enterprise setting, that’s table stakes for automation.
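A minimal sketch of that availability check is shown below. The function name `is_slot_free` and the 30-minute default are my assumptions for illustration, and `calendar_service` is assumed to be a client built with `googleapiclient.discovery.build("calendar", "v3", credentials=creds)`.

```python
from datetime import datetime, timedelta, timezone


def is_slot_free(calendar_service, start: datetime, duration_minutes: int = 30) -> bool:
    """Return True if no event on the primary calendar overlaps the slot.

    `start` must be timezone-aware, because the Calendar API expects
    timestamps that carry an explicit UTC offset.
    """
    if start.tzinfo is None:
        raise ValueError("start must be timezone-aware")
    end = start + timedelta(minutes=duration_minutes)
    response = (
        calendar_service.events()
        .list(
            calendarId="primary",
            timeMin=start.isoformat(),
            timeMax=end.isoformat(),
            singleEvents=True,  # expand recurring events into single instances
        )
        .execute()
    )
    # Any returned event overlaps the requested window.
    return len(response.get("items", [])) == 0
```

This version treats every overlapping event as a conflict, including all-day events. A production agent would probably want finer rules, for example ignoring events the user has declined.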

Part 6: The Main Workflow - putting it all together

At the heart of the Smart Email Responder lies the run() method, our agent’s orchestrator. It implements a simple but powerful perception–reasoning–action loop. Despite the complexity under the hood, the main workflow is clean and understandable. Fetch emails → Analyze with AI → Take appropriate action, that’s it.

This loop fetches unread emails, analyzes them using an LLM to determine importance, and takes action - either drafting replies or scheduling meetings. Each step is modular, enabling independent testing, fault isolation, and future extensibility. In production, this pattern scales well because it supports retries, error handling, and observability (e.g., logging decision outcomes, tracking API failures). While minimal in code, this loop models a classic event-driven architecture: it continuously listens, decides, and acts. It’s control flow for an intelligent, real-time agent system.
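The shape of that loop can be sketched in a few lines. To keep the example self-contained, this version is a plain function that receives the three steps as callables (`fetch_emails`, `analyze`, `act`), and it assumes the analysis is a dict with an `is_important` key. Those names are illustrative assumptions, and the actual `run()` method in the repo is organized differently.

```python
import logging
from typing import Callable, Iterable

logger = logging.getLogger("smart_email_responder")


def run(
    fetch_emails: Callable[[], Iterable[dict]],
    analyze: Callable[[dict], dict],
    act: Callable[[dict, dict], None],
) -> dict:
    """One pass of the loop: fetch, analyze, act, with per-email error isolation."""
    stats = {"processed": 0, "acted": 0, "skipped": 0, "failed": 0}
    for email in fetch_emails():                 # perception
        stats["processed"] += 1
        try:
            analysis = analyze(email)            # reasoning
            if analysis.get("is_important"):
                act(email, analysis)             # action
                stats["acted"] += 1
            else:
                stats["skipped"] += 1
        except Exception:
            # One bad email should not stop the whole run.
            logger.exception("Failed to process email %s", email.get("id"))
            stats["failed"] += 1
    return stats
```

Returning the counters gives you a cheap first layer of observability: you can log them after every run and alert when the failure count climbs.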

Future Improvements: Building a “Super” Smart Email Responder

While our current agent is powerful, it is very basic compared to enterprise needs. I will evolve this code, and you are welcome to contribute.

Here are the improvements I am planning for this agent. There is so much to do:

Memory & Context Engineering

Currently, each email is analyzed in isolation. Adding memory would help the agent understand email threads, recognize patterns in sender behavior, and provide more contextual responses. Secondly, adding long-term memory would help align responses with company messaging guidelines, maintain brand tone, and draw on enterprise knowledge to make responses useful. There is so much opportunity here.

Advanced Prompt Engineering

Instead of analyzing emails individually, we'd consider the full conversation context, sender relationship, and historical patterns.

Learning & Adaptation

The agent could learn from users’ editing patterns and gradually improve its response suggestions to match their communication style. Drive personalization. Beautiful.

Multi-Modal Understanding

Handle emails with attachments, images, and complex formatting for richer understanding. That would be so powerful.

Multi-agent System

Scale from a single-agent loop to a multi-agent architecture, where specialized agents handle specific responsibilities: a Classifier Agent identifies the email type, a Planner Agent coordinates response workflows, and Tool-Use Agents interface with APIs.

Key Takeaways for Agent Builders

Designing intelligent agents isn't just about plugging in a language model, it's about orchestrating reasoning, structure, and action into a reliable system. Here's what this project teaches us:

Structure enables scale: Typed data models like `EmailAnalysis` serve as the agent's working memory, making its reasoning traceable, testable, and debuggable. In production, structure is how you prevent chaos.

Prompting is programming: A well-crafted prompt is logic encoded in natural language. Treat your prompts like code: test them, version them, and design them with precision.

LLMs need tools: An agent that can reason but not act is just an expensive notepad. Tool calling, through APIs and services, is what turns decisions into outcomes. Integration is the bridge between intelligence and execution.

Simple loops can scale: The perception–reasoning–action loop at the heart of this agent reflects a classic event-driven design. Start with a clean loop, then layer in retries, observability, and memory for long-term resilience.

Ultimately, the smartest agents aren't just the ones that think, they're the ones that do, reliably, inside real-world workflows.

Ready to Build?

The Smart Email Responder demonstrates how to build a practical AI agent that solves real problems. It combines AI reasoning with API integrations to create genuine value for users.

Want to try it yourself?

👉 Check out the full code here: AgentBuild GitHub Repository 🚀

👉 Join AgentBuild Circles to learn to build agents in groups.

What agent will you build next?

Share your ideas in the comments below! 👇

Happy building,

Sandipan.

AgentBuild community.
