Stop Building AI Systems You Can’t Measure

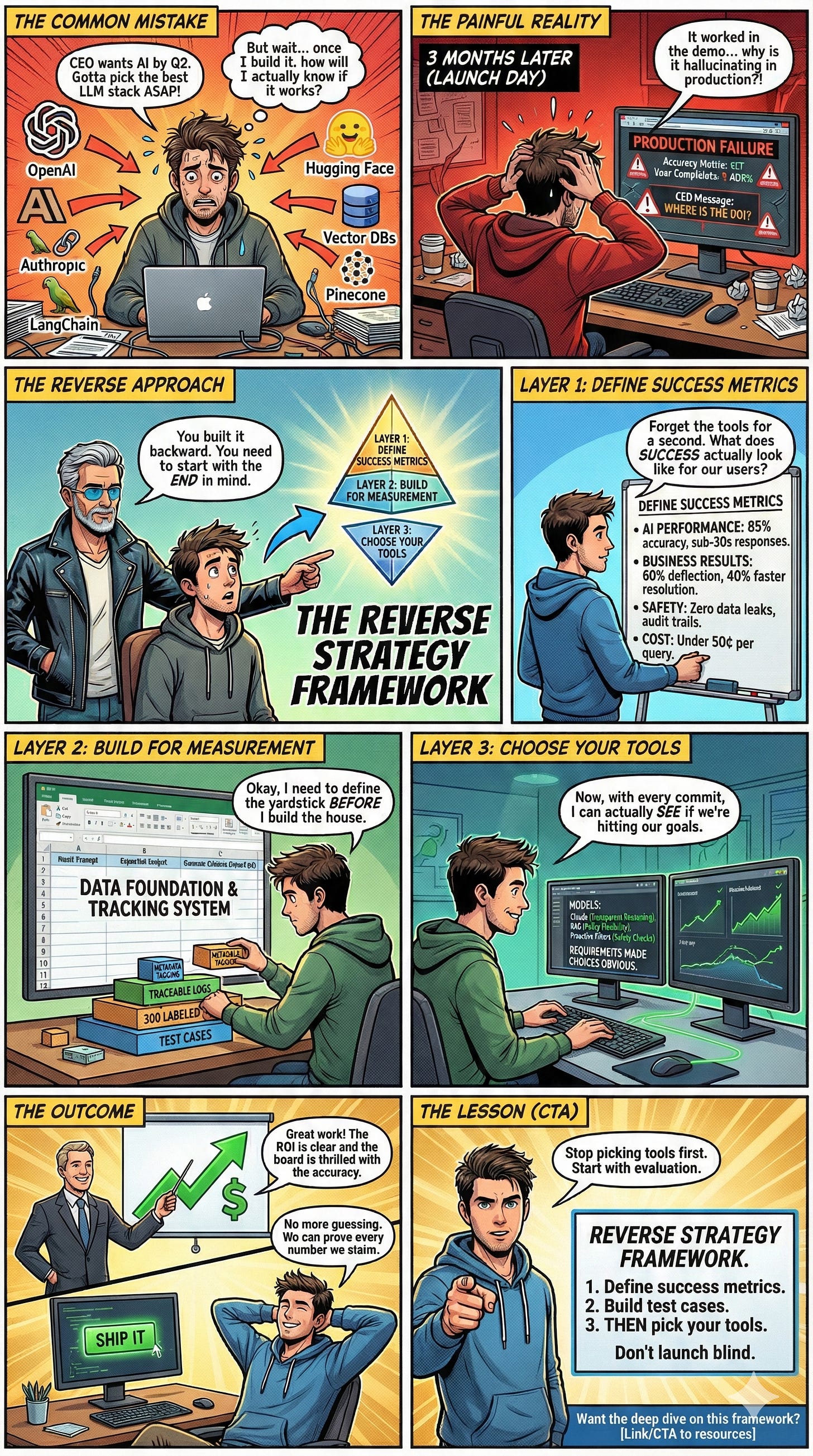

Your demo worked great. Leadership loved it. Now someone asks "Is it actually working?" and you have no answer. Here's why 95% of AI projects fail - and the reverse strategy that fixes it...

Picture this: Your team just demoed a shiny new AI chatbot. Leadership is nodding. The technology stack sounds impressive - GPT, LangChain, Pinecone. Three months of work, and it handles those carefully selected demo questions beautifully.

Then someone asks: “How do we know it’s actually working?”

Silence.

This is the trap 95% of AI teams fall into. They pick tools first, build something that works in demos, then realize they can’t answer the only question that matters: Is this delivering value?

The Teams That Win Do It Backwards

The most successful AI implementations I’ve seen follow what I call the Reverse Strategy Framework. Instead of rushing to pick models and frameworks, they start by figuring out how to measure success, design systems to capture those measurements, and only then choose their tools.

Sounds simple, right? But it changes everything.

A Banking Chatbot That Almost Failed

Let me show you what this looks like in practice. A retail bank came to me with their customer support chatbot already planned out: GPT-4, LangChain, Pinecone. They just needed help launching it.

I asked three questions:

How will you know if the chatbot makes correct refund decisions?

When something breaks, how will you debug it?

What does each customer interaction actually cost?

They had no answers. These questions don’t surface when you start with tools. They only emerge when you start with measurement.

The Framework That Actually Works

Layer 1: Define Success Metrics

Before touching any code, we defined what “working” meant across four categories:

AI Performance: 85% accuracy on fee refunds, sub-30-second responses

Business Results: 60% deflection rate, 40% faster resolution times

Safety: Zero customer data leaks, complete audit trails

Cost: Under 50 cents per query (vs. $5 for human support)

This immediately revealed three massive problems with their original plan. They had no test cases, no way to trace AI decisions, and no handling for customers with multiple accounts. All invisible when picking tools, obvious when defining measurements.
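To make Layer 1 concrete, here is a minimal sketch of how targets like these could be written down as data before any chatbot code exists. The names (`Target`, `TARGETS`, `failing_targets`) are my own illustrative choices, not something from the bank's system, and only the directly numeric targets are included: "40% faster resolution" needs a baseline, and "complete audit trails" needs a different kind of check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str
    threshold: float
    higher_is_better: bool

    def met(self, observed: float) -> bool:
        # Simplification: thresholds are treated as inclusive bounds.
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold


# Numbers come from the four categories above; metric names are hypothetical.
TARGETS = [
    Target("fee_refund_accuracy", 0.85, higher_is_better=True),
    Target("response_seconds", 30.0, higher_is_better=False),
    Target("deflection_rate", 0.60, higher_is_better=True),
    Target("customer_data_leaks", 0, higher_is_better=False),
    Target("cost_per_query_usd", 0.50, higher_is_better=False),
]


def failing_targets(observed: dict) -> list:
    """Return the targets that are missed OR have no measurement at all."""
    return [
        t.name
        for t in TARGETS
        if t.name not in observed or not t.met(observed[t.name])
    ]
```

Treating a missing measurement as a failure is the design choice that matters here: a metric nobody can compute yet shows up on day one instead of after launch.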

Layer 2: Build for Measurement

We designed two systems simultaneously: the data foundation the AI needs, and the tracking system to see what it’s doing.

Every policy section got tagged with metadata. Every decision created a traceable log. We built 300 labeled test cases from historical data.
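A traceable decision log can be as simple as one JSON line per decision. The sketch below is an assumption about what such a record might hold, not the bank's actual schema: every field name, the `fees-4.2` section ID, and the `example-model` label are hypothetical. It logs an intent label rather than raw customer text, in keeping with the zero-leak safety target.

```python
import json
import uuid
from datetime import datetime, timezone


def build_decision_record(intent, decision, policy_section_ids,
                          model, latency_seconds, cost_usd):
    """Assemble one log entry describing a single chatbot decision."""
    return {
        "trace_id": str(uuid.uuid4()),  # lets you look up this exact decision later
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "intent": intent,
        "decision": decision,
        # Which tagged policy sections the answer relied on.
        "policy_section_ids": list(policy_section_ids),
        "model": model,
        "latency_seconds": latency_seconds,
        "cost_usd": cost_usd,
    }


def to_log_line(record):
    """Serialize a record as one JSON line, ready to append to a log file."""
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    record = build_decision_record(
        intent="fee_refund",
        decision="approve",
        policy_section_ids=["fees-4.2"],
        model="example-model",
        latency_seconds=3.4,
        cost_usd=0.31,
    )
    print(to_log_line(record))
```

With latency and cost on every record, the performance and cost metrics fall out of the same log that supports debugging.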

The payoff? When accuracy dropped in month two, we could see exactly why: the AI was calculating refund eligibility from the wrong timestamp. Fixed in hours, not weeks.
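That kind of diagnosis depends on being able to replay labeled test cases and see where the failures cluster. Here is a rough sketch of such a harness. The case format (`id`, `input`, `expected`, `tags`) and the `decide` callable are assumptions for illustration; in practice `decide` would wrap whatever system is being tested.

```python
from collections import Counter


def evaluate(cases, decide):
    """Run every labeled case through `decide` and summarize the misses."""
    if not cases:
        raise ValueError("need at least one labeled test case")

    failures = []
    for case in cases:
        predicted = decide(case["input"])
        if predicted != case["expected"]:
            failures.append({
                "case_id": case["id"],
                "expected": case["expected"],
                "predicted": predicted,
                "tags": case.get("tags", []),
            })

    # Counting failures per tag shows whether misses cluster in one area.
    failures_by_tag = Counter(tag for f in failures for tag in f["tags"])
    return {
        "accuracy": (len(cases) - len(failures)) / len(cases),
        "failures": failures,
        "failures_by_tag": failures_by_tag,
    }
```

If most failures share one tag, you know where to look first, which is the difference between a fix in hours and a fix in weeks.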

Layer 3: Choose Your Tools

Only now did we select models and frameworks. Our requirements made the choices obvious: Claude for transparent reasoning, RAG for policy flexibility and citations, proactive filters for safety checks.

The Dashboard That Changed Everything

Every Monday, leadership reviews a dashboard of live metrics: queries handled, satisfaction scores, cost per query, quality issues. When accuracy dips or costs spike, alerts fire automatically.

Week six: Fee refund accuracy dropped to 81%. Alert triggered. Team traced it to a missing policy update. Fixed in 4 hours.

Week eleven: Customer satisfaction on transfers slipped to 3.8. Logs showed technically correct but confusing responses. Simplified templates. Back to 4.3 within days.
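The alerting behind a dashboard like this does not need to be elaborate. Below is a minimal sketch that rolls one week of decision records up into dashboard numbers and checks them against the accuracy and cost targets defined earlier. The record fields (`cost_usd`, `correct`) and function names are hypothetical, and `correct` is assumed to be filled in only for decisions a human has reviewed.

```python
ACCURACY_TARGET = 0.85   # fee refund accuracy target from the success metrics
COST_TARGET_USD = 0.50   # cost per query must stay under this


def weekly_rollup(records):
    """Aggregate one week of decision records into dashboard metrics."""
    if not records:
        raise ValueError("no records for this week")
    reviewed = [r for r in records if r.get("correct") is not None]
    accuracy = None
    if reviewed:
        accuracy = sum(1 for r in reviewed if r["correct"]) / len(reviewed)
    return {
        "queries": len(records),
        "cost_per_query_usd": sum(r["cost_usd"] for r in records) / len(records),
        "accuracy": accuracy,
    }


def alerts_for(rollup):
    """Return a human-readable message for each target the week missed."""
    messages = []
    accuracy = rollup["accuracy"]
    if accuracy is None:
        messages.append("accuracy: no reviewed decisions this week")
    elif accuracy < ACCURACY_TARGET:
        messages.append(
            f"accuracy {accuracy:.0%} is below the {ACCURACY_TARGET:.0%} target"
        )
    cost = rollup["cost_per_query_usd"]
    if cost >= COST_TARGET_USD:
        messages.append(
            f"cost per query ${cost:.2f} is not under ${COST_TARGET_USD:.2f}"
        )
    return messages
```

A week with no reviewed decisions raises its own alert, because an unmeasured week is a problem in its own right.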

Your Choice: Design or Firefighting?

Yes, this is more upfront work. The question is: Would you rather spend two weeks designing measurements before you build, or six months firefighting a system you can’t see into after launch?

Would you rather walk into your CFO’s office with a dashboard showing 60% deflection and $0.50 per query, or say “We think it’s working, but we’re not sure how to measure it”?

If you can’t measure it, you can’t improve it. If you can’t prove it works, you can’t defend the investment.

The reverse strategy isn’t about being cautious - it’s about being successful. Start with measurement. Build for observability. Let requirements drive tools.

Your future self (and your CFO) will thank you.

Free Templates That Help You Get Started

I’ve created a few templates that can help you get started. Find the links in the description of the video. Download them, review them, and get in touch if you have questions.

Watch the full breakdown in the video, where I walk through all three layers with real examples and decision points.

Want to dive deeper into production AI systems? Subscribe to AgentBuild for weekly practical tutorials on building reliable AI agents.
