Why Multi-Agent Systems Are Eating Enterprise AI (And How Not to Choke)

Multi-agent systems are the new frontier. In this issue: why 40% of projects will fail, the hidden pain points of orchestration, and how to build agent teams that actually cooperate in production.

Hey AgentBuilders,

In this edition I am sharing some of my experience talking about, building, and experimenting with multi-agent systems in enterprises.

We moved quickly from “How to make LLMs work in production” to “How to make agents work with humans”. This happened in early 2025, and most of us, including me, claimed 2025 would be the year of AI Agents.

AI Agents promised automation, and in many cases they are delivering on that promise. Suddenly, though, architectural complexity went up, not down.

Welcome to the messy, fascinating world of multi-agent systems.

Today I answer the following questions that often come up in my meetings:

Why are so many multi-agent projects failing despite solid tech?

What makes agents cooperate in production?

How do you know when you really need multiple agents (vs one smart one)?

What metrics prove agentic systems are delivering business value?

How do you build resiliency into your agent architecture from day one?

The State of Play: We’re All Building Agent Teams Now

Here’s where we are. The good news is that multi-agent architectures are becoming the default, not the exception. Well, I must stress that this is the world I see around me: well-funded startups and large enterprises. Most new enterprise AI deployments now include some form of agentic capability. I think we’re past the proof-of-concept stage (a.k.a. the concept has been proven) - companies are shipping this stuff to production.

The reality check? Industry analysts forecast that over 40% of agentic AI projects will be scrapped by 2027. Read that less as a prediction and more as a warning sign. Most failures aren’t because the technology doesn’t work - they happen because teams underestimate what it takes to make multiple agents actually cooperate.

The shift from one smart agent to many specialized agents sounds logical on paper. In practice, however, it’s like going from managing a solo consultant to managing a committee where everyone speaks a slightly different language and has their own agenda.

What We’re Really Talking About Here

Let me define this from first principles, because “multi-agent system” gets poured around like coffee (or beer) at a tech conference.

A multi-agent system is a collection of autonomous reasoning units (agents) that can perceive their environment, make decisions, take actions, and communicate with each other to achieve goals that are difficult or impossible for a single agent to accomplish alone.

That’s it. Not magic. Not sentient. Just distributed problem-solving with software that can reason and act.

Why does this matter more than just building one really smart agent?

Three reasons.

Modularity. When something breaks, you replace one specialist agent, not your entire system. Your customer service agent stays online while you fix your inventory agent. Ahem, microservices.

Emergent capability. Two agents that handle scheduling and resource allocation separately might, together, discover optimization patterns neither was programmed to find. This isn’t theoretical - I’ve seen it happen in transport logistics.

Distributed reasoning. Complex problems don’t fit in one context window. A legal contract review might need one agent for compliance checks, another for financial terms, and a third for risk assessment. Each agent maintains deep context in its domain.

The architectural levers you need to understand:

memory management (who remembers what and for how long)

agent coordination (how do agents decide who does what and when)

orchestration (do you have a conductor or is it a jazz ensemble)

governance (how do you audit, version, and control agents that make real decisions)
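To make the first lever concrete, here is a minimal Python sketch of memory that is scoped by owner and expires after a time-to-live. `ScopedMemory`, its methods, and the TTL values are hypothetical, chosen only to illustrate “who remembers what and for how long”; a production system would sit on a real store such as a database or vector index.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional, Tuple


@dataclass
class ScopedMemory:
    """Hypothetical store: each entry has an owner (who remembers it)
    and a time-to-live in seconds (for how long)."""
    clock: Callable[[], float] = time.monotonic
    entries: Dict[Tuple[str, str], Tuple[Any, float]] = field(default_factory=dict)

    def remember(self, owner: str, key: str, value: Any, ttl_s: float) -> None:
        # Store the value together with its absolute expiry time.
        self.entries[(owner, key)] = (value, self.clock() + ttl_s)

    def recall(self, owner: str, key: str) -> Optional[Any]:
        # An agent only sees entries it owns; expired entries are evicted on read.
        item = self.entries.get((owner, key))
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:
            del self.entries[(owner, key)]
            return None
        return value


# Usage with a fake clock so the example is deterministic.
now = [0.0]
memory = ScopedMemory(clock=lambda: now[0])
memory.remember("customer_agent", "history", ["order #1"], ttl_s=90 * 24 * 3600)
memory.remember("inventory_agent", "stock", 42, ttl_s=5)
now[0] = 10.0
print(memory.recall("customer_agent", "history"))   # ['order #1']
print(memory.recall("inventory_agent", "stock"))    # None: expired
print(memory.recall("inventory_agent", "history"))  # None: not its memory
```

The long-lived customer history and the short-lived stock level mirror the kind of split that justifies separate agents in the first place.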

The Three Pain Points Nobody Warns You About

Pain Point One: The Coordination Nightmare

You build three specialist agents. They’re brilliant individually. Put them together and they either duplicate work, contradict each other, or get stuck in a loop asking each other the same question.
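One cheap defence against that last failure is a guard on agent-to-agent messages. The sketch below is a hypothetical `ConversationGuard` (not from any framework) that raises once the same sender asks the same receiver the same normalised question too often, or once a conversation exceeds a hop budget. The limits are arbitrary assumptions. It treats the symptom, not the cause, which is the topology problem covered next.

```python
from collections import Counter
from dataclasses import dataclass, field


class LoopDetected(Exception):
    """Raised when agents appear to be going in circles."""


@dataclass
class ConversationGuard:
    max_hops: int = 10      # total messages allowed for one task (assumed limit)
    max_repeats: int = 2    # identical questions allowed per sender/receiver pair
    hops: int = 0
    seen: Counter = field(default_factory=Counter)

    def check(self, sender: str, receiver: str, message: str) -> None:
        self.hops += 1
        # Normalise case and whitespace so near-identical repeats still match.
        key = (sender, receiver, " ".join(message.lower().split()))
        self.seen[key] += 1
        if self.hops > self.max_hops:
            raise LoopDetected(f"hop budget of {self.max_hops} exceeded")
        if self.seen[key] > self.max_repeats:
            raise LoopDetected(f"{sender} keeps asking {receiver}: {message!r}")


guard = ConversationGuard()
try:
    for _ in range(5):
        guard.check("pricing_agent", "inventory_agent", "What is the stock level?")
        guard.check("inventory_agent", "pricing_agent", "Which SKU do you mean?")
except LoopDetected as err:
    print("Escalate to a human or the coordinator:", err)
```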

Why this happens: You didn’t design the interaction topology; you just started building. Sound familiar? Research shows that the network structure between agents matters as much as the agents themselves. A fully connected network, where every agent talks to every other agent, creates chaos. A strict hierarchy can create bottlenecks.

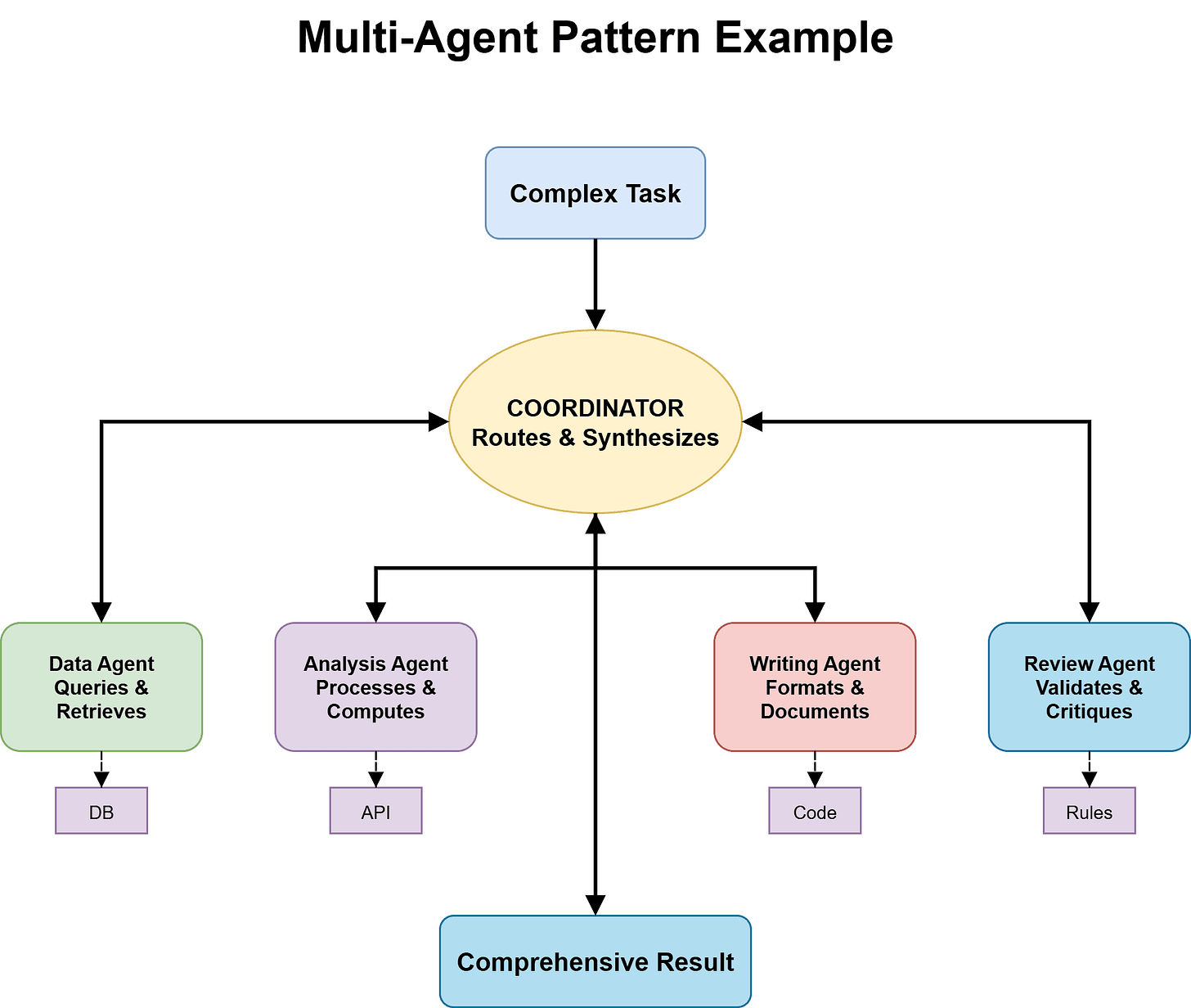
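The arithmetic behind “fully connected creates chaos” is simple: with n agents, every pair is a potential conversation, so channels grow quadratically, while hub-and-spoke needs only one channel per worker. A quick Python check:

```python
def fully_connected_channels(n_agents: int) -> int:
    # Every pair of agents can talk directly: n choose 2.
    return n_agents * (n_agents - 1) // 2


def hub_and_spoke_channels(n_agents: int) -> int:
    # One coordinator plus (n - 1) workers, each linked only to the hub.
    return max(n_agents - 1, 0)


for n in (3, 5, 10):
    print(f"{n} agents: {fully_connected_channels(n)} vs {hub_and_spoke_channels(n)} channels")
# 3 agents: 3 vs 2 channels
# 5 agents: 10 vs 4 channels
# 10 agents: 45 vs 9 channels
```

Channel count is only a rough proxy for coordination cost, but it shows how quickly a peer-to-peer mesh outgrows a single hub.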

What actually works: Start with a hub-and-spoke pattern for your first multi-agent system. One coordinator agent that understands the overall task, and 2-4 worker agents that are specialists. The coordinator routes work, manages dependencies, and integrates results. Workers don’t talk to each other directly - they report back to the hub.
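Here is a minimal Python sketch of that hub-and-spoke shape, reusing the contract-review specialists from earlier. The `Coordinator` and `Subtask` names are hypothetical, and each worker is a plain function standing in for an LLM-backed agent. The point is structural: workers never call each other, the hub skips duplicate subtasks, and every result flows back to the hub for integration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

# Hypothetical worker: takes a subtask payload, returns a result.
Worker = Callable[[str], str]


@dataclass(frozen=True)
class Subtask:
    skill: str    # which specialist should handle it
    payload: str  # what to work on


class Coordinator:
    """Hub: routes subtasks to specialists and integrates their results.
    Workers never talk to each other; everything goes through the hub."""

    def __init__(self, workers: Dict[str, Worker]) -> None:
        self.workers = workers

    def run(self, subtasks: List[Subtask]) -> Dict[str, List[str]]:
        results: Dict[str, List[str]] = {}
        done: Set[Subtask] = set()
        for task in subtasks:
            if task in done:  # skip duplicates so no work is done twice
                continue
            done.add(task)
            worker = self.workers.get(task.skill)
            if worker is None:
                raise ValueError(f"no specialist for skill {task.skill!r}")
            # The worker reports back to the hub; the hub groups results by skill.
            results.setdefault(task.skill, []).append(worker(task.payload))
        return results


hub = Coordinator({
    "compliance": lambda text: f"compliance checked: {text}",
    "financial_terms": lambda text: f"terms reviewed: {text}",
    "risk": lambda text: f"risk scored: {text}",
})
print(hub.run([
    Subtask("compliance", "clause 4"),
    Subtask("financial_terms", "payment schedule"),
    Subtask("compliance", "clause 4"),  # duplicate, skipped
    Subtask("risk", "termination clause"),
]))
```

Because all traffic passes through one place, the hub is also the natural spot for the logging and loop guards discussed elsewhere in this issue.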

Once that’s stable, you can experiment with peer-to-peer patterns for specific use cases.

Pain Point Two: Agent-Washing

This is the multi-agent equivalent of blockchain projects that should have been a database.

I’ve reviewed architectures where a company built five agents to handle a workflow that a single well-prompted LLM with function calling could have solved. They did it because “multi-agent” was in the 2025 roadmap, not because the problem needed it.

The test: Can you clearly articulate why agent specialization provides value? If your agents are just different prompts hitting the same model with the same context, you don’t have a multi-agent system - you have a prompt library with extra steps.

When multi-agent actually makes sense:

Different agents need different models (small, fast model for routing; large model for complex reasoning).

Agents need to maintain separate, long-running context (e.g., one agent tracks customer history over months; another handles real-time inventory).

True parallelization provides value (multiple agents processing different parts of a dataset simultaneously).

Specialist agents need different tools, APIs, or knowledge bases.

Value clarity exercise: Before you build, write down the specific capability each agent adds, why that capability can’t be a function call or a prompt variation, and the metric that proves this agent is worth the operational overhead. If you can’t fill that out clearly, you might not need multiple agents yet.
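If you prefer the exercise as a checklist your team can run in design review, here is a hypothetical Python version: one record per proposed agent holding the three answers above, and a review that flags any blank answer. The field names and example agents are illustrative only.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AgentJustification:
    name: str
    capability: str          # the specific capability this agent adds
    why_not_a_function: str  # why a function call or prompt variation won't do
    success_metric: str      # the metric that proves it's worth the overhead

    def gaps(self) -> List[str]:
        # Any blank answer means the agent isn't justified yet.
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


def review(plan: List[AgentJustification]) -> List[str]:
    return [f"{a.name}: missing {', '.join(a.gaps())}" for a in plan if a.gaps()]


plan = [
    AgentJustification("router", "cheap intent classification",
                       "runs on a small, fast model", "p95 routing latency"),
    AgentJustification("summarizer", "summaries", "", ""),
]
print(review(plan))  # ['summarizer: missing why_not_a_function, success_metric']
```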

Pain Point Three: Production is Where Dreams Go to Die

Your agents worked beautifully in testing. In production, one agent starts hallucinating, another gets stuck in a retry loop, and you have no idea which agent made the decision that just cost you $50,000.

The operational reality: Agents in production need the same rigor as any distributed system - observability, failure detection, versioning, drift control.

What you actually need starts with observability at the agent level. Log every agent decision, input, and output with timestamps. When something goes wrong (and it will), you need to trace the decision chain across agents. Think distributed tracing for microservices, but for reasoning.
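A minimal sketch of agent-level decision logging in Python, assuming one shared `trace_id` per user request and a `parent_id` link from each decision to the one that triggered it. `log_decision` and `decision_chain` are hypothetical helpers writing JSON lines to a list; in production you would more likely emit spans through a tracing stack such as OpenTelemetry.

```python
import json
import time
import uuid
from typing import Any, List, Optional


def log_decision(sink: List[str], trace_id: str, agent: str, inputs: Any,
                 output: Any, parent_id: Optional[str] = None) -> str:
    """Append one JSON record per agent decision and return its span id."""
    span_id = uuid.uuid4().hex
    sink.append(json.dumps({
        "ts": time.time(),
        "trace_id": trace_id,    # shared by every agent working on one request
        "span_id": span_id,
        "parent_id": parent_id,  # the upstream decision that triggered this one
        "agent": agent,
        "input": inputs,
        "output": output,
    }))
    return span_id


def decision_chain(sink: List[str], span_id: str) -> List[str]:
    """Walk parent links back from one decision to the first agent involved."""
    by_span = {record["span_id"]: record for record in map(json.loads, sink)}
    chain = []
    current = by_span.get(span_id)
    while current is not None:
        chain.append(current["agent"])
        current = by_span.get(current["parent_id"])
    return list(reversed(chain))


sink: List[str] = []
trace = uuid.uuid4().hex
root = log_decision(sink, trace, "coordinator", "refund request", "route to billing_agent")
decision = log_decision(sink, trace, "billing_agent", "refund request", "approve refund",
                        parent_id=root)
print(decision_chain(sink, decision))  # ['coordinator', 'billing_agent']
```

When an expensive decision surfaces, you start from its span and walk back to see every agent that contributed to it.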

Then failure modes and graceful degradation. What happens when Agent B is down? Does Agent A queue work, fall back to a simpler approach, or alert a human? Design this before production.
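Here is one way Agent A could wrap its calls to Agent B, sketched in Python under stated assumptions: `AgentUnavailable` is a hypothetical exception your agent client raises on timeouts or outages, and the order of preference (retry, then a simpler fallback, then queue the work) is a design choice, not a rule. Every degraded path alerts a human.

```python
import queue
from typing import Callable, Optional


class AgentUnavailable(Exception):
    """Hypothetical error raised by an agent client on timeout or outage."""


def call_with_degradation(
    agent_b: Callable[[str], str],
    task: str,
    fallback: Optional[Callable[[str], str]] = None,
    backlog: Optional["queue.Queue[str]"] = None,
    alert: Callable[[str], None] = print,
    retries: int = 2,
) -> Optional[str]:
    """Agent A calling Agent B: retry, then fall back, then queue; alert on any degradation."""
    for _ in range(retries + 1):
        try:
            return agent_b(task)
        except AgentUnavailable:
            continue  # real code would back off before retrying
    if fallback is not None:
        alert(f"Agent B down, used fallback for: {task}")
        return fallback(task)
    if backlog is not None:
        backlog.put(task)
        alert(f"Agent B down, queued for later: {task}")
        return None
    alert(f"Agent B down, no fallback or queue for: {task}")
    return None


def flaky_agent_b(task: str) -> str:
    raise AgentUnavailable("timeout")  # simulate an outage


print(call_with_degradation(flaky_agent_b, "reprice SKU-42",
                            fallback=lambda t: f"rule-based price for {t}"))
```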

Version control for agent behavior matters too. Your agents are making decisions based on prompts, models, tools, and memory. When you update one agent, can you roll back if it misbehaves? Can you A/B test agent versions?
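A minimal sketch of what “version control for agent behavior” could look like in Python: every change to prompt, model, or tools becomes a new immutable `AgentVersion`, rollback re-activates the previous one, and an A/B split hashes the request id so a given request always lands on the same version. `AgentRegistry` and the model names are placeholders; in practice you would likely keep prompts and configs in git and store the active pointer somewhere shared.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AgentVersion:
    prompt: str
    model: str
    tools: Tuple[str, ...]


class AgentRegistry:
    """Hypothetical registry: each change to prompt, model, or tools is a new immutable version."""

    def __init__(self) -> None:
        self.history: Dict[str, List[AgentVersion]] = {}
        self.active: Dict[str, int] = {}

    def publish(self, agent: str, version: AgentVersion) -> int:
        versions = self.history.setdefault(agent, [])
        versions.append(version)
        self.active[agent] = len(versions) - 1
        return self.active[agent]

    def rollback(self, agent: str) -> AgentVersion:
        # Re-activate the previous version when the new one misbehaves.
        if self.active.get(agent, 0) == 0:
            raise ValueError(f"no earlier version of {agent!r} to roll back to")
        self.active[agent] -= 1
        return self.history[agent][self.active[agent]]

    def pick_for_request(self, agent: str, request_id: str,
                         candidate: int, share: float) -> AgentVersion:
        # A/B split: hashing the request id keeps each request on one version.
        bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 100
        index = candidate if bucket < share * 100 else self.active[agent]
        return self.history[agent][index]


registry = AgentRegistry()
registry.publish("pricing", AgentVersion("v1 prompt", "large-model", ("get_price",)))
registry.publish("pricing", AgentVersion("v2 prompt", "large-model",
                                         ("get_price", "get_competitor_price")))
print(registry.rollback("pricing").prompt)  # v1 prompt
```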

Finally, drift detection. Agent outputs can shift over time due to model updates, data changes, or subtle prompt drift. Set up automated checks: “Is this agent’s output distribution similar to last week’s?”
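One simple way to automate that weekly check, assuming your agent’s outputs can be bucketed into labels (decisions, intents, routing targets): compare last week’s label distribution with this week’s using total variation distance. The statistic and the 0.1 threshold are my assumptions for illustration; PSI or a chi-squared test are common alternatives, and free-text outputs would need classifying or embedding first.

```python
from collections import Counter
from typing import Dict, Iterable


def label_distribution(outputs: Iterable[str]) -> Dict[str, float]:
    counts = Counter(outputs)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    # 0.0 means identical distributions, 1.0 means no overlap at all.
    labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(label, 0.0) - q.get(label, 0.0)) for label in labels)


def drift_alert(last_week: Iterable[str], this_week: Iterable[str],
                threshold: float = 0.1) -> bool:
    distance = total_variation(label_distribution(last_week), label_distribution(this_week))
    return distance > threshold


last_week = ["approve"] * 80 + ["escalate"] * 20
this_week = ["approve"] * 55 + ["escalate"] * 45
print(drift_alert(last_week, this_week))  # True: distance is about 0.25
```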

Quick Wins: Where to Start Without Drowning

You don’t need to boil the ocean. Here’s what works for teams getting started.

Pick a contained domain with clear hand-offs (a.k.a. one small use case that solves a particular problem). Good candidates include pipeline testing automation (one agent generates tests, another reviews them, a third runs them), transport logistics (route planning agent plus maintenance scheduling agent plus cost optimization agent), and multi-step talent workflows (resume screening agent plus interview scheduling agent plus reference check agent). Bad first projects are things like “automate all of customer service” (too broad) or “general reasoning assistant” (no clear specialization).

Design for measurement from day one. This is where most projects fail. They build something cool and then scramble to prove it was worth it. Before you write a line of code, define time saved (how long did this process take humans before and after), error reduction (what’s the error rate before and after agents), hand-offs eliminated (how many times did work sit in a queue or get passed between people), and cost per transaction (what did this cost before versus after). Pick the metric that your leadership actually cares about. Then instrument your agents to measure it continuously.
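To make “instrument it continuously” concrete, here is a small Python sketch that turns before and after measurements into the four numbers above. `ProcessSnapshot` is hypothetical, and the figures in the usage lines are made up purely to show the arithmetic: time, errors, and cost are reported as relative reductions, hand-offs as an absolute count removed per case.

```python
from dataclasses import dataclass


@dataclass
class ProcessSnapshot:
    minutes_per_case: float
    error_rate: float         # fraction of cases with an error, 0..1
    handoffs_per_case: float  # times work waited in a queue or changed hands
    cost_per_case: float      # in your currency of choice


def improvement(before: ProcessSnapshot, after: ProcessSnapshot) -> dict:
    """Relative reductions for time, errors, and cost; absolute hand-offs removed."""
    def rel(b: float, a: float) -> float:
        return (b - a) / b if b else 0.0

    return {
        "time_saved": rel(before.minutes_per_case, after.minutes_per_case),
        "error_reduction": rel(before.error_rate, after.error_rate),
        "handoffs_eliminated": before.handoffs_per_case - after.handoffs_per_case,
        "cost_reduction": rel(before.cost_per_case, after.cost_per_case),
    }


# Illustrative numbers only, not real results.
before = ProcessSnapshot(40.0, 0.08, 5.0, 12.0)
after = ProcessSnapshot(10.0, 0.02, 1.0, 3.0)
print({k: round(v, 2) for k, v in improvement(before, after).items()})
# {'time_saved': 0.75, 'error_reduction': 0.75, 'handoffs_eliminated': 4.0, 'cost_reduction': 0.75}
```

Whichever metric your leadership picks, the same before/after structure applies; only the field you report changes.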

Start with the coordinator pattern. Your first multi-agent system should have a coordinator agent at the top, worker agents below it (search, analyze, report), and a shared memory or knowledge store at the bottom. The coordinator knows the workflow. Workers know their specialization. Shared memory ensures consistency. It’s simple, debuggable, and in my experience it covers roughly 80% of use cases.
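A minimal Python sketch of that coordinator pattern, assuming the workflow is a small dependency graph (search, then analyze, then report). The coordinator walks the graph in dependency order with the standard library’s `graphlib.TopologicalSorter` (Python 3.9+). Each hypothetical worker gets a copy of shared memory, so it cannot overwrite other steps’ entries, and only the coordinator writes results back, which keeps the store consistent.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Hypothetical worker: reads earlier results from memory, returns its own output.
Worker = Callable[[Dict[str, str]], str]


def run_workflow(workflow: Dict[str, List[str]], workers: Dict[str, Worker]) -> Dict[str, str]:
    """Coordinator: `workflow` maps each step to the steps it depends on."""
    shared_memory: Dict[str, str] = {}
    for step in TopologicalSorter(workflow).static_order():
        # Workers get a copy, so only the coordinator writes to shared memory.
        shared_memory[step] = workers[step](dict(shared_memory))
    return shared_memory


workers = {
    "search": lambda mem: "3 supplier quotes",
    "analyze": lambda mem: f"cheapest of {mem['search']}",
    "report": lambda mem: f"Recommendation: {mem['analyze']}",
}
flow = {"search": [], "analyze": ["search"], "report": ["analyze"]}
print(run_workflow(flow, workers)["report"])  # Recommendation: cheapest of 3 supplier quotes
```

Keeping the workflow as data rather than code means adding a worker is a one-line change to `flow`, and the coordinator stays the single place where ordering decisions live.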

What to Watch: The Next 12-18 Months

Frameworks are maturing fast. AWS, Microsoft, and others are releasing orchestration SDKs that handle a lot of the plumbing. You’ll spend less time building infrastructure and more time designing agent interactions. Pay attention - these tools will shape how we think about multi-agent patterns.

Governance is becoming non-negotiable. Regulators are waking up to the idea that agents making autonomous decisions need audit trails, approval workflows, and kill switches. If you’re in healthcare, finance, or government, bake this in from day one. Even if you’re not in a regulated industry, your customers will start asking about it.

Early traction industries include supply chain (agents for demand forecasting plus inventory optimization plus logistics routing are already live at major retailers), IT operations (SRE teams using agents for incident detection plus root cause analysis plus remediation), pharma (drug discovery pipelines with specialist agents for literature review plus molecule generation plus safety prediction), and transport maintenance (predictive maintenance agents plus scheduling agents plus parts inventory agents, and this one’s close to home for me - I’m seeing real results in fleet management).

Can you see the pattern?

These are industries with complex workflows, high coordination costs, and clear metrics.

Let’s Talk - Reach Out

I’m curious where you’re at with this. Reply and tell me about one agentic architecture you’re working on or stuck on right now. It doesn’t have to be multi-agent; solo agents count too.

If you had to pick one metric to prove your agents are delivering value, what would it be? Time saved? Cost reduction? Quality improvement? Error rate?

And if you’re feeling ambitious: What’s the biggest operational challenge you’re facing with agents in production?

Before You Leave

Remember: multi-agent systems are powerful, but they’re not magic. They’re distributed systems that happen to reason. Treat them with the same engineering discipline you’d give any distributed system, and they’ll reward you.

If you’re building in the weeds and hitting walls, reach out. I’ve debugged enough agent systems to have strong opinions on what breaks and what doesn’t.

Happy to help unstick you.

Evolving the Newsletter

This newsletter is more than updates - it is our shared notebook. I want it to reflect what you find most valuable: insights, playbooks, diagrams, or maybe even member spotlights.

👉 Drop me a note, comment, or share your suggestion anytime.

Your feedback will shape how this evolves.

Found this useful? Ask your friends to join.

We have so much planned for the community - can’t wait to share more soon.